Analysis Of 546,000+ AI Overviews

AI Overviews are the most significant SEO change agent since mobile – maybe ever.

Until now, we’ve lacked a representative data set to thoroughly analyze how AIOs (AI Overviews) work.

Thanks to exclusive data from Surfer, I conducted the largest analysis of AI Overviews so far, with more than 546,000 rows and over 44 GB of data.

In many cases, the data answers who, why, and how to rank in AIOs with astonishing clarity. In others, it raises new questions that we can seek to answer to refine our understanding of how to succeed in AIOs.

The stakes are high: AIOs can lead to a significant traffic decrease of around 10% (according to my first analysis), depending on citation design and user intent – and there is no escaping this.

Since the AIO pullback two weeks after the initial launch at the end of May, AIOs have slowly been ramping up.

Image Credit: Lyna ™

The Data

The dataset spans 546,513 rows, 44.4 GB, and over 12 million domains. To my knowledge, no comparable dataset has been explored.

- 85% of queries and results are in English.

- 253,710 results are live (not part of SGE, Google’s beta environment), and 285,000 are part of SGE.

- 8,297 queries show AIOs for both SGE and non-SGE.

- The data contains queries, organic results, cited domains, and AIO answers.

- The dataset was pulled in June.

Limitations:

- It’s possible that new features are not included since AIOs change all the time.

- The dataset does not yet contain languages that AIOs recently added, like Portuguese or Spanish.

I will share insights over several Memos, so stay tuned for part 2.

Answers

I sought to answer five questions in this first exploration.

- Which domains are most visible in AIOs?

- Does every AIO have citations?

- Does organic position determine AIO visibility?

- How many AIOs contain the search query?

- How different are AIOs in vs. outside of SGE?

Which Domains Are Most Visible In AIOs?

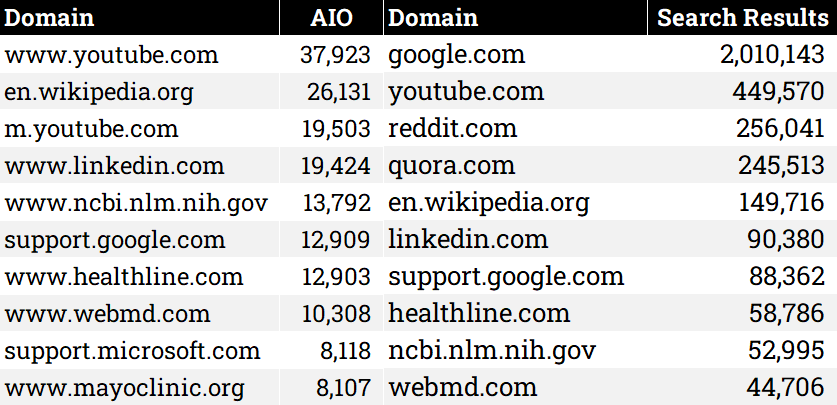

We can assume that the most cited domains also get the most traffic from AIOs.

In my previous analyses, Wikipedia and Reddit were the most cited sources. This time, we see a different picture.

The top 10 most cited domains in AIOs:

- youtube.com.

- wikipedia.org.

- linkedin.com.

- NIH (National Library of Medicine).

- support.google.com.

- healthline.com.

- webmd.com.

- support.microsoft.com.

- mayoclinic.org.

The top 10 best-ranking domains in classic search results:

- www.google.com.

- www.youtube.com.

- www.reddit.com.

- www.quora.com.

- en.wikipedia.org.

- www.linkedin.com.

- support.google.com.

- www.healthline.com.

- www.ncbi.nlm.nih.gov.

- www.webmd.com.
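The two lists above format hosts differently ("youtube.com" vs. "www.youtube.com"), so any domain count has to normalize hosts first. Here is a minimal sketch of counting the most cited domains, assuming cited URLs are available as a plain list of strings. The function names and sample URLs are hypothetical, and stripping a leading "www." is an assumption about how hosts should be merged; other subdomains such as "en." are kept as-is.

```python
from collections import Counter
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Return the lowercased host of a URL with a leading "www." stripped (assumption)."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def top_domains(urls: list[str], n: int = 10) -> list[tuple[str, int]]:
    """Count how often each normalized domain appears and return the n most common."""
    return Counter(domain_of(u) for u in urls).most_common(n)


# Hypothetical sample of cited URLs (not taken from the dataset).
cited = [
    "https://www.youtube.com/watch?v=abc",
    "https://youtube.com/watch?v=def",
    "https://en.wikipedia.org/wiki/Search_engine_optimization",
    "https://www.linkedin.com/pulse/example",
]
print(top_domains(cited, n=2))  # [('youtube.com', 2), ('en.wikipedia.org', 1)]
```

Running the same function over the top 20 organic URLs gives the second list, so both rankings are compared on the same host normalization.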

The biggest difference? Reddit, Quora, and Google are heavily underrepresented in AIO citations, which is counterintuitive and runs against trends we’ve seen in the past. I found only a few AIO citations for these three domains:

- Reddit: 130.

- Quora: 398.

- Google: 612.

Did Google make a conscious change here?

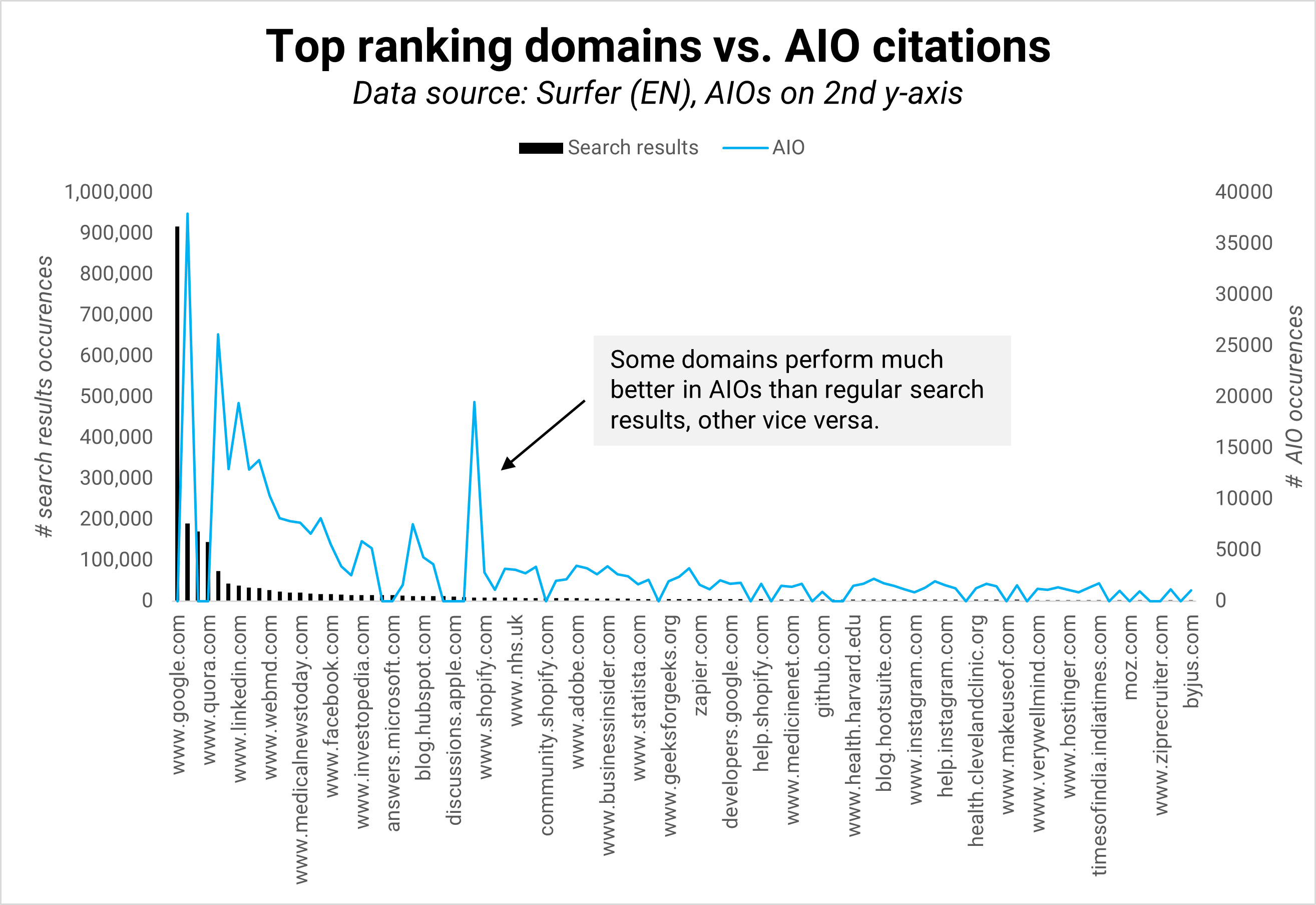

AIO citations can differ vastly from the URLs that rank in classic search results.

The fact that two social networks, YouTube and LinkedIn, are among the top three most cited domains raises the question of whether content on YouTube and LinkedIn can influence AIO answers more than content on our own sites.

Videos take more effort to produce than LinkedIn answers, but they might also be more defensible against copycats. AIO-optimization strategies should include social and video content.

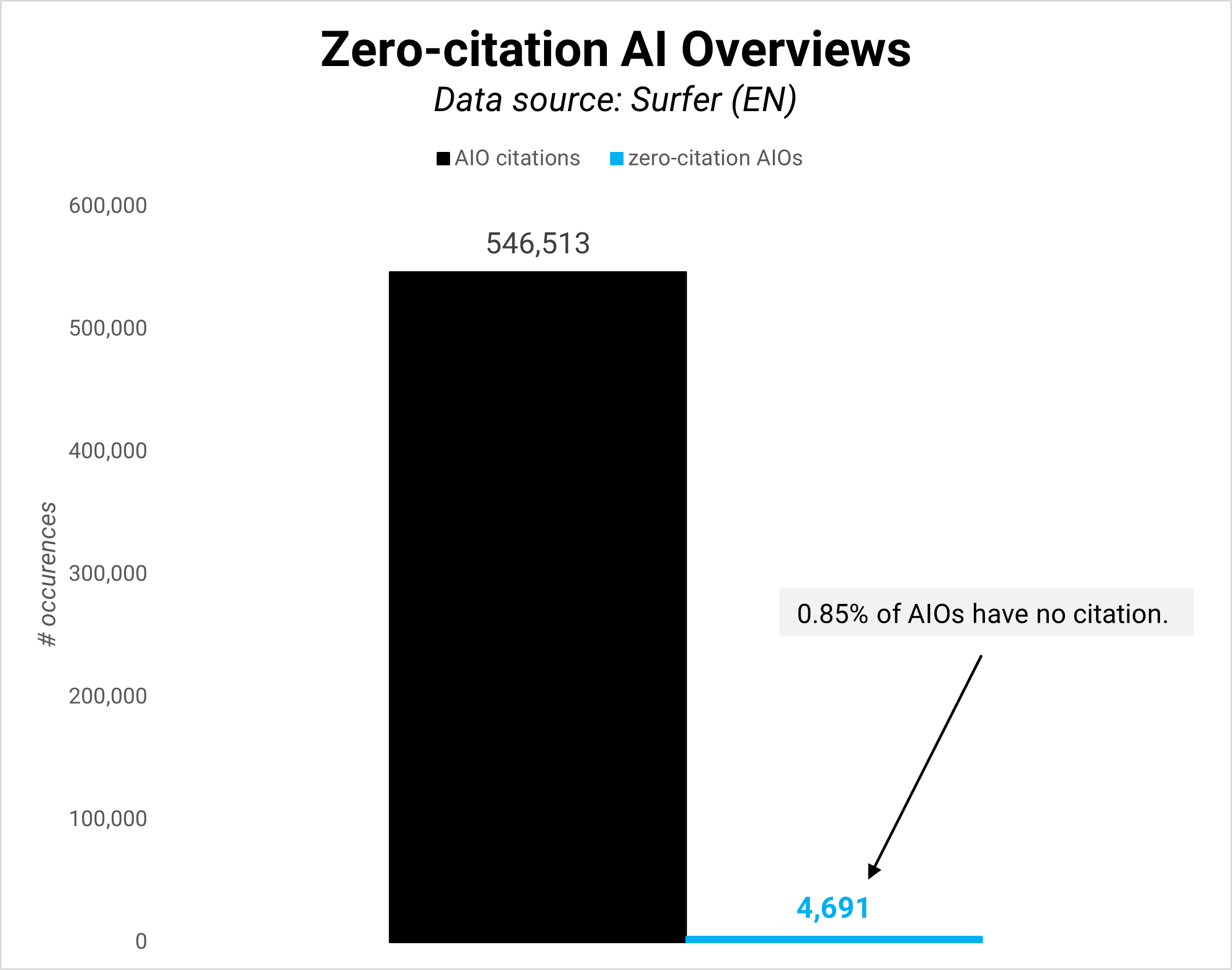

Does Every AIO Have Citations?

We assume every AIO has citations, but that’s not always the case.

Queries with very simple user intent, like “What is a meta description for an article?” or “Is 1.5 a whole number?” don’t show any citations.

I counted 4,691 zero-citation queries in the dataset, less than 1% (0.85%).
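Counting zero-citation AIOs amounts to checking for an empty citation list per row. A minimal sketch, assuming each AIO is a dict with a hypothetical "citations" key holding a list of URLs; the record layout and sample rows are illustrative, not the dataset's actual schema.

```python
def zero_citation_share(aios: list[dict]) -> tuple[int, float]:
    """Return (count, share) of AIOs whose citation list is missing or empty."""
    zero = sum(1 for aio in aios if not aio.get("citations"))
    share = zero / len(aios) if aios else 0.0
    return zero, share


# Hypothetical rows; the first two queries are the examples from the text.
aios = [
    {"query": "Is 1.5 a whole number?", "citations": []},
    {"query": "What is a meta description for an article?", "citations": []},
    {"query": "P&L", "citations": ["https://example.com/pl"]},
    {"query": "Marketing manager", "citations": ["https://example.com/mm"]},
]
print(zero_citation_share(aios))  # (2, 0.5)
```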

It’s questionable how valuable this traffic would have been in the first place.

However, the fact that Google is willing to display AI answers without citations raises the question of whether we’ll also see more complex and valuable queries without sources.

The impact would be devastating, as citations are the only way to get clicks from AIOs.

Does Organic Position Determine AIO Visibility?

Lately, more data has come out showing a high overlap between pages cited in AIOs and pages ranking in the top spots for the same query.

The underlying question is: Do you need to do anything different to optimize for AIOs than for classic search results?

Early on, Google would cite URLs in AIOs that didn’t rank in the top 10 results. Some even came from penalized or non-indexed domains.

The concern was that a system would pick citations far removed from classic search rankings, making it hard to optimize for AIOs and leading to questionable answers.

Over the last one to two months, that trend seemed to have changed, but my data does not indicate a turnaround.

I found:

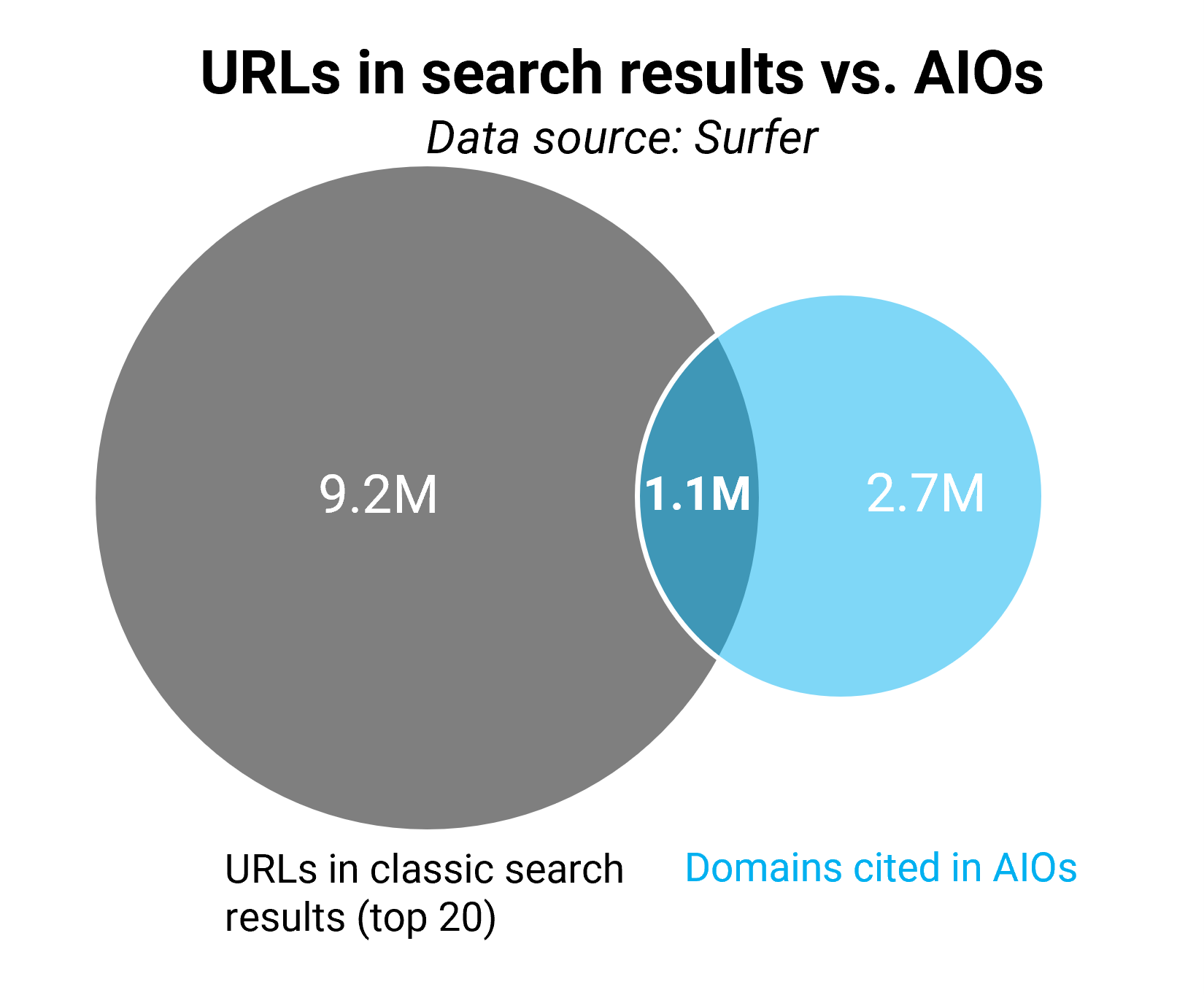

- 9.2 million total unique URLs in the top 20 search results.

- 2.7 million total URLs in AIO citations.

- 1.1 million unique URLs in both the top 20 search result positions and as AIO citations.

12.1% of URLs in the top 20 search results are also AIO citations. In reverse, 59.6% of AIO citations are not from the top 20 search results.
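The two percentages come from the same intersection divided by different denominators. A minimal sketch with Python sets, assuming URLs are compared as exact strings (no normalization); the function name and toy URL sets are hypothetical.

```python
def overlap_shares(top20_urls: set[str], cited_urls: set[str]) -> tuple[float, float]:
    """Return (share of top-20 URLs that are also cited,
    share of cited URLs that are NOT in the top 20)."""
    both = top20_urls & cited_urls
    ranked_and_cited = len(both) / len(top20_urls)
    cited_not_ranked = 1 - len(both) / len(cited_urls)
    return ranked_and_cited, cited_not_ranked


# Toy example: one of four top-20 URLs is cited; two of three citations are outside the top 20.
top20 = {"https://a.example", "https://b.example", "https://c.example", "https://d.example"}
cited = {"https://b.example", "https://x.example", "https://y.example"}
print(overlap_shares(top20, cited))
```

Plugging in the rounded totals from the list (1.1M / 9.2M ≈ 12% and 1 − 1.1M / 2.7M ≈ 59%) lands close to the reported 12.1% and 59.6%; the small gaps are consistent with the totals being rounded to one decimal.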

Two things support this observation: a Google patent that describes how links are selected after summarization, and the weak correlation between search result rank and AIO citations (-0.19 overall and -0.21 for the top 3 search results).
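The text doesn’t state which correlation coefficient was used. One plausible reading, sketched here as an assumption, is a Pearson correlation between organic position and a 0/1 "cited" flag (a point-biserial correlation). Because position 1 is the best rank, a negative value means better-ranked URLs are cited somewhat more often. The sample SERP is hypothetical.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation; with a 0/1 ys this equals the point-biserial correlation."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical SERP: organic positions 1-10 and whether each URL was cited in the AIO.
positions = list(range(1, 11))
cited_flags = [1, 0, 1, 0, 0, 1, 0, 0, 0, 0]
print(round(pearson(positions, cited_flags), 2))  # -0.49
```

A value near zero, like the reported -0.19, means position explains only a small part of which URLs get cited.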

Ranking higher in the search results certainly increases the chances of being visible in AIOs, but it’s far from the only factor. Google aims for more diversity in AIO citations.

In the search results, URLs rank for ~15.7 keywords on average, whether or not they’re in the top 10 positions. In AIO citations, it’s roughly half: 8.7 keywords per URL.
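The keywords-per-URL figure is an average over distinct URLs. A minimal sketch, assuming the data can be flattened into hypothetical (keyword, URL) pairs, one per ranking or citation; running it once on organic pairs and once on citation pairs gives the two averages being compared.

```python
from collections import defaultdict


def avg_keywords_per_url(pairs: list[tuple[str, str]]) -> float:
    """Average number of distinct keywords per URL from (keyword, url) pairs."""
    keywords_by_url: dict[str, set[str]] = defaultdict(set)
    for keyword, url in pairs:
        keywords_by_url[url].add(keyword)  # a set ignores duplicate pairs
    if not keywords_by_url:
        return 0.0
    return sum(len(kws) for kws in keywords_by_url.values()) / len(keywords_by_url)


# Hypothetical organic pairs: URL "a" ranks for three keywords, URL "b" for one.
organic_pairs = [
    ("p&l", "a"),
    ("profit and loss", "a"),
    ("income statement", "a"),
    ("marketing manager", "b"),
]
print(avg_keywords_per_url(organic_pairs))  # 2.0
```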

As a result, a larger number of sites can get clicks from AIOs. However, that diversity is offset by fewer URLs being cited in AIOs and fewer outbound clicks, since the answers are more in-depth. A tad over 12 million URLs appear in search results, compared to 2.7 million in AIOs (23.1%).

How Many AIOs Contain The Search Query?

It’s unclear whether AIO answers contain the search query. Since queries are really a proxy for user intent, which is often implied rather than explicit, it’s possible that they don’t.

As a result, tailoring content too much to the explicit query and missing intent could lead Google to not pick it as a citation or source for AI answers.

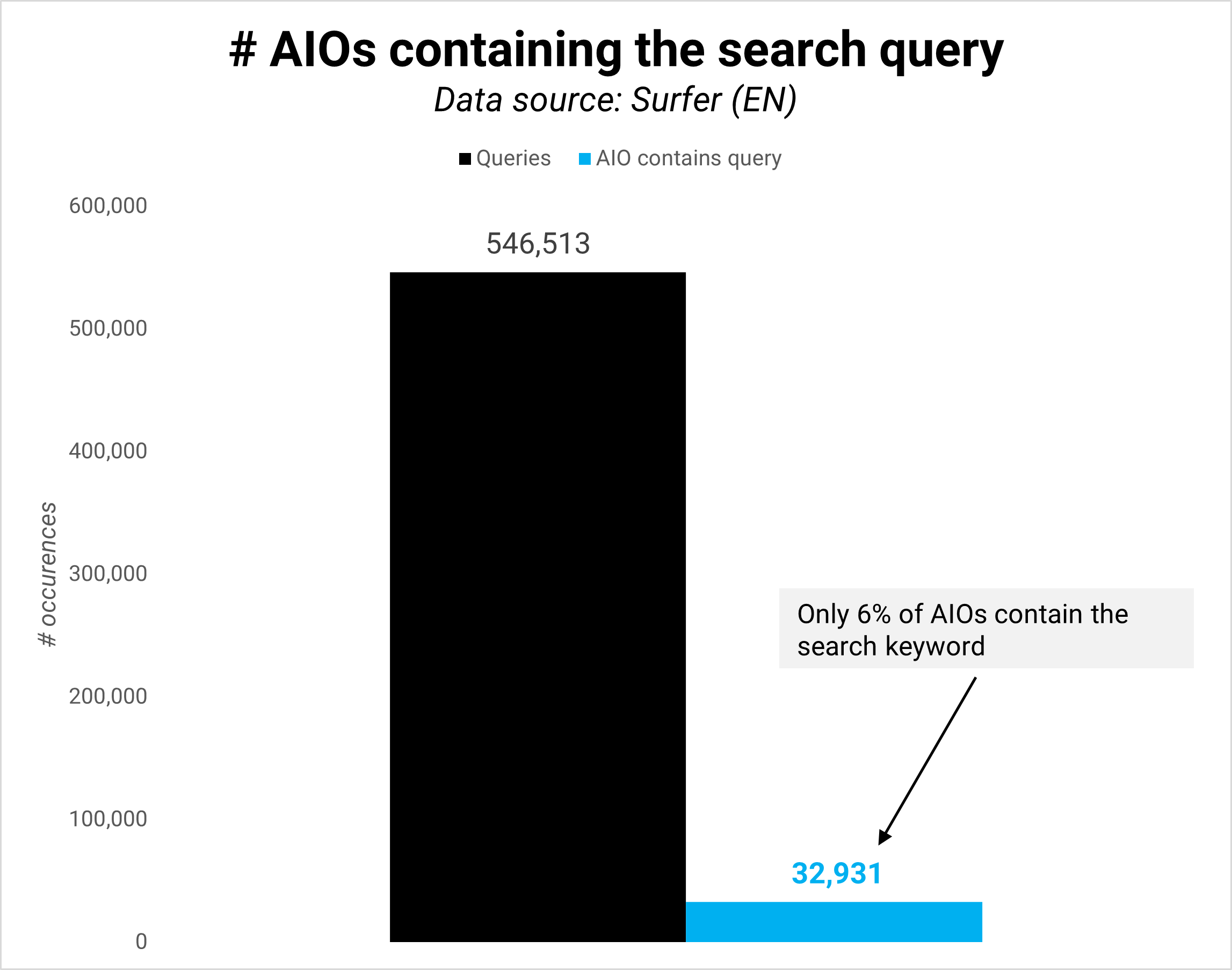

The data shows that only 6% of AIOs contain the search query.

That number is slightly higher in SGE, at 7%, and lower in live AIOs, at 5.1%.
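The text doesn’t say how "contains the search query" was matched. A minimal sketch, assuming an exact substring match after lowercasing and collapsing whitespace; fuzzier matching (stemming, partial terms) would likely report higher rates. The function names and sample answers are hypothetical.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences don't block a match."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def contains_query(query: str, answer: str) -> bool:
    """True if the full query appears verbatim (after normalization) in the AIO text."""
    return normalize(query) in normalize(answer)


def containment_share(rows: list[tuple[str, str]]) -> float:
    """Share of (query, aio_text) rows whose AIO text contains the query."""
    if not rows:
        return 0.0
    return sum(contains_query(q, a) for q, a in rows) / len(rows)


# Hypothetical rows: the third answer restates the question, so the exact query is absent.
rows = [
    ("P&L", "A P&L statement summarizes revenues and costs."),
    ("marketing manager", "A Marketing  Manager plans campaigns."),
    ("is 1.5 a whole number", "No, 1.5 is not a whole number."),
]
print(containment_share(rows))
```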

As a result, meeting user intent in the content is much more important than we might have assumed.

This should not come as a surprise since user intent has been a key ranking requirement in SEO for many years, but seeing the data is shocking.

How Different Are AIOs In Vs. Outside Of SGE?

SGE is Google’s beta testing environment for new Search features. Contrary to a common misconception, it is not the same as AI Overviews.

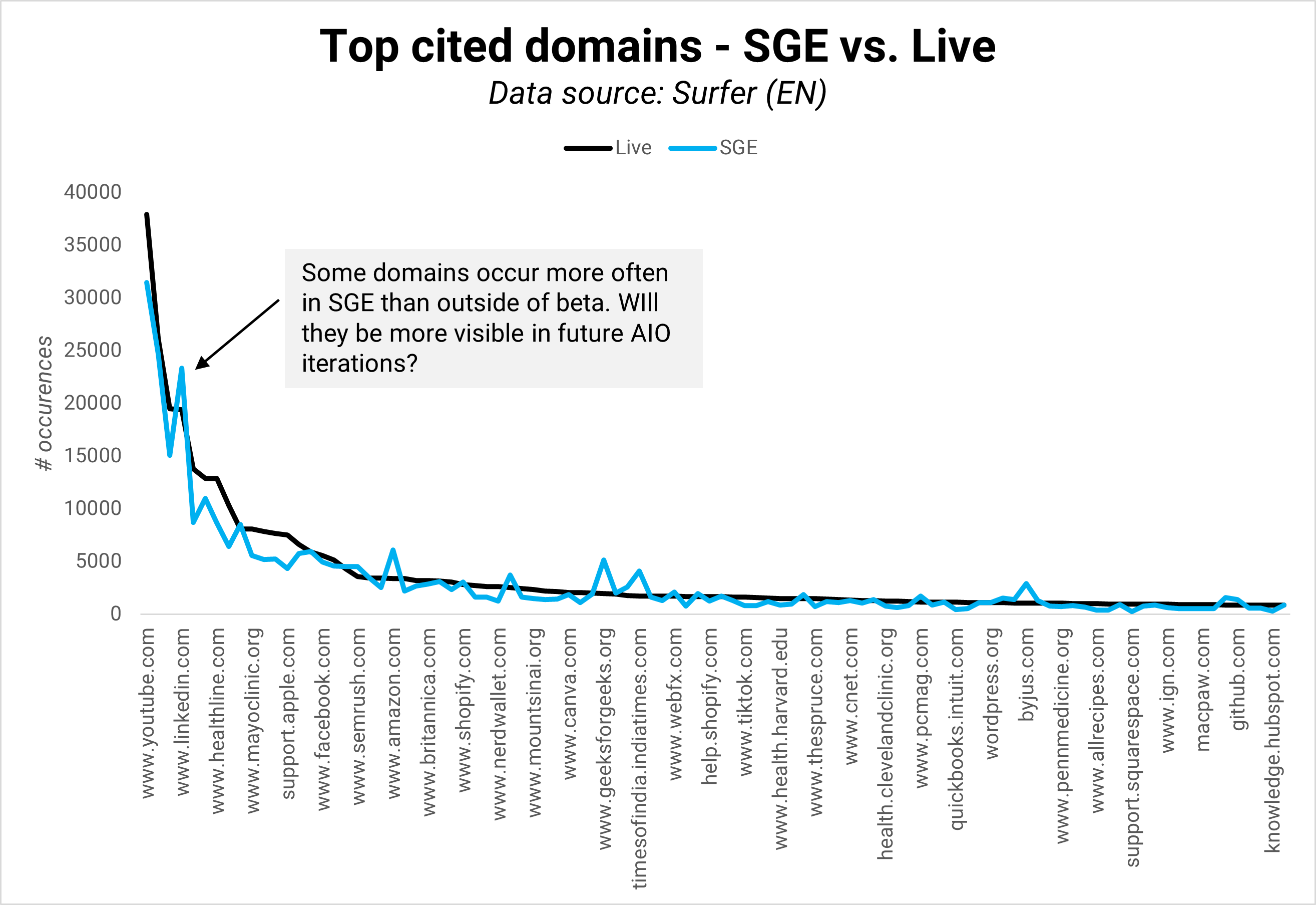

Since Google has experimented with new AI features in SGE, the question arises of how different AIOs are in vs. out of SGE. Can we learn anything from AIOs in SGE about what’s to come?

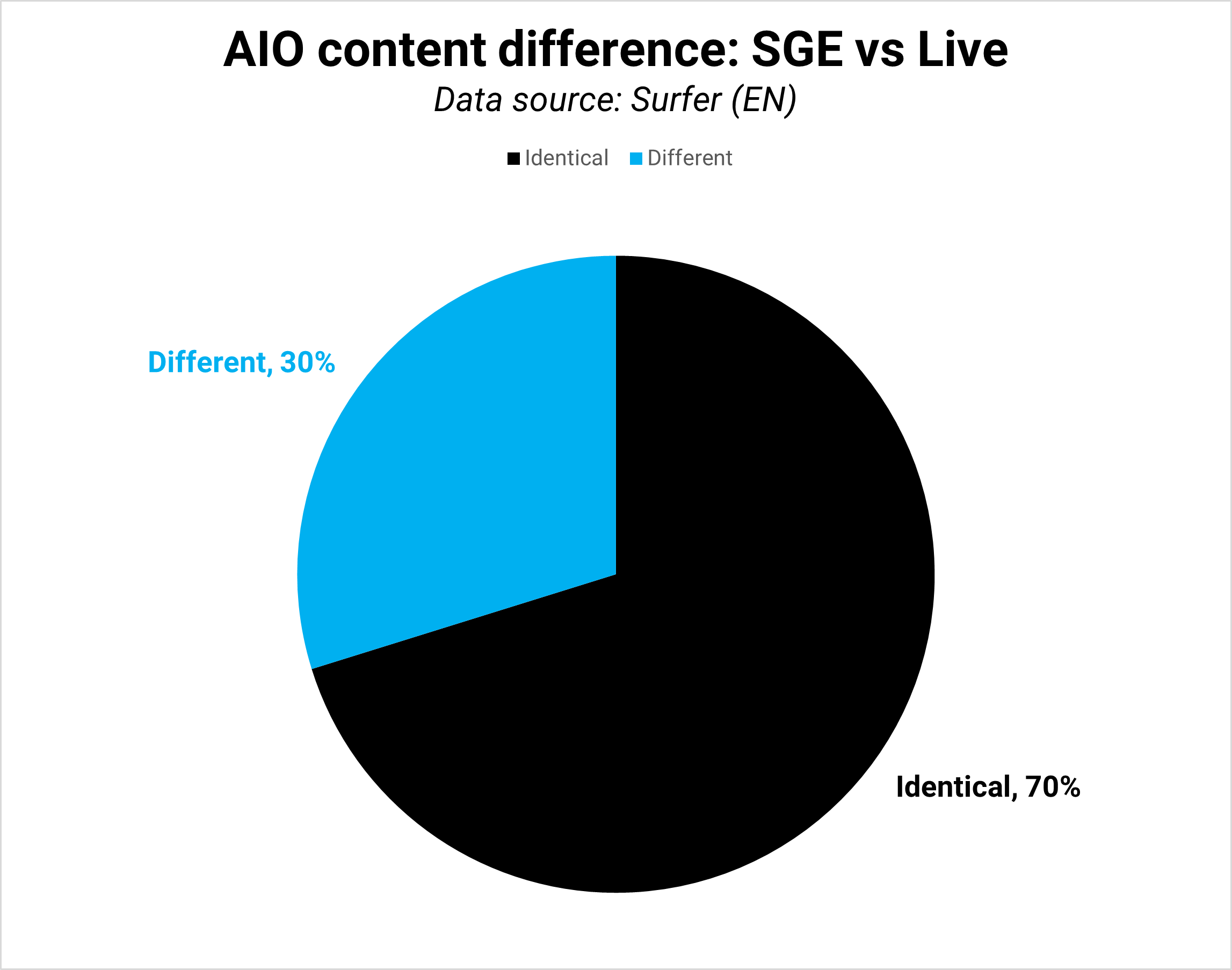

I looked at over 8,000 AIOs in and outside of SGE and found that 30% of AIOs have very different content in SGE compared to live. SGE results are likely not an indicator of what’s to come, at least at this point.
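The text doesn’t define "very different content." One way to operationalize it, sketched here as an assumption, is a word-level similarity ratio from Python’s `difflib.SequenceMatcher`, flagging pairs below a cut-off. The 0.5 threshold, function names, and sample answers are hypothetical.

```python
from difflib import SequenceMatcher


def similarity(sge_text: str, live_text: str) -> float:
    """Word-level similarity ratio in [0, 1] between two AIO answers."""
    return SequenceMatcher(None, sge_text.lower().split(), live_text.lower().split()).ratio()


def share_very_different(pairs: list[tuple[str, str]], threshold: float = 0.5) -> float:
    """Share of (sge_text, live_text) pairs whose similarity falls below the threshold.

    The default 0.5 is a hypothetical cut-off, not the one used in the analysis.
    """
    if not pairs:
        return 0.0
    return sum(similarity(sge, live) < threshold for sge, live in pairs) / len(pairs)


pairs = [
    ("A P&L statement summarizes revenue and costs.",
     "A P&L statement summarizes revenue and costs."),
    ("A marketing manager plans campaigns.",
     "Marketing managers oversee budgets, teams, and brand strategy across channels."),
]
print(share_very_different(pairs))  # 0.5
```

The reported 30% depends heavily on the chosen metric and threshold, so it is worth checking a sample of flagged pairs by hand.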

The length of SGE vs. live AIOs varies, but it is roughly the same on average: 1,019 characters in SGE vs. 996 live.

For example, the AIO for the search query “Marketing manager” has 347 characters in SGE vs. 1,473 live.

But most AIO answers look more like the one for “P&L,” which has 1,188 characters in SGE and 1,124 live.

We cannot conclude that SGE results (and the potential future of AIOs) are longer (more detailed) or shorter (more succinct). I will analyze the results further.
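Near-identical averages can hide large per-query gaps. A minimal sketch, assuming paired (SGE, live) answer texts per query; it reports both means plus the mean absolute per-query gap. The placeholder strings only reproduce the character counts of the two examples above.

```python
from statistics import mean


def length_summary(pairs: list[tuple[str, str]]) -> dict[str, float]:
    """Mean character length of SGE and live AIOs, plus the mean absolute per-query gap."""
    sge_lengths = [len(sge) for sge, _ in pairs]
    live_lengths = [len(live) for _, live in pairs]
    return {
        "mean_sge": mean(sge_lengths),
        "mean_live": mean(live_lengths),
        "mean_abs_gap": mean(abs(s - l) for s, l in zip(sge_lengths, live_lengths)),
    }


# Placeholder texts with the lengths from the "Marketing manager" and "P&L" examples.
pairs = [("x" * 347, "x" * 1473), ("x" * 1188, "x" * 1124)]
print(length_summary(pairs))
```

With only these two queries, the means differ by about 531 characters while the average per-query gap is 595, which is why the full distribution matters more than the two averages.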

On the domain level, the following 10 domains would see the biggest relative visibility increases if SGE were a predictor of future performance:

- byjus.com.

- geeksforgeeks.org.

- timesofindia.indiatimes.com.

- amazon.com.

- ahrefs.com.

- github.com.

- medium.com.

- pcmag.com.

- techtarget.com.

- coursera.org.

The 10 domains that would lose the most relative AIO visibility are:

- support.squarespace.com.

- knowledge.hubspot.com.

- quickbooks.intuit.com.

- allrecipes.com.

- bhg.com.

- bankrate.com.

- cnbc.com.

- nerdwallet.com.

- thespruce.com.

- tiktok.com.
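The text doesn’t define "relative visibility." One plausible definition, sketched here as an assumption, is each domain’s share of all citations, compared between SGE and live AIOs as a relative change, (SGE share − live share) / live share. Domains never cited live are skipped to avoid dividing by zero. The domain lists are hypothetical.

```python
from collections import Counter


def citation_share(domains: list[str]) -> dict[str, float]:
    """Each domain's share of all citations in the list."""
    counts = Counter(domains)
    total = sum(counts.values())
    return {d: c / total for d, c in counts.items()}


def relative_change(sge_domains: list[str], live_domains: list[str]) -> dict[str, float]:
    """Relative change in citation share from live to SGE, for domains cited live."""
    sge, live = citation_share(sge_domains), citation_share(live_domains)
    return {d: (sge.get(d, 0.0) - share) / share for d, share in live.items()}


# Hypothetical cited domains for the queries present in both environments.
live = ["a.com", "a.com", "b.com", "c.com"]
sge = ["a.com", "b.com", "b.com", "b.com"]
changes = relative_change(sge, live)
print(sorted(changes, key=changes.get, reverse=True))  # ['b.com', 'a.com', 'c.com']
```

Relative change favors small domains: a site going from one to three citations triples its share, so a minimum citation count is a sensible filter before ranking winners and losers.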

Meaning

All of this means three things:

1. Optimizing for AI Overviews is similar to optimizing for Featured Snippets, except that it is more focused on user intent.

Featured Snippet optimization is driven by exact matches: you need to match the question and clearly indicate that the answer relates to it. That’s not the case for AIOs.

For AIOs, we can tweak our content to match the AIO answer or give a better one, but reflecting “useful” information in the search query context is much more important than the exact wording.

Three challenges stand in the way:

- Understanding and targeting the types of sections that appear in AIOs, like lists, comparisons, “what is…” or “how to…” explanations, etc.

- Keeping track of AIOs, which change fairly often, so we must adjust our content and impact expectations accordingly. Just recently, Google started testing a sidebar with links instead of a carousel.

- Ranking in the top 10 positions for a query (ideally the top 3), which is not a prerequisite but increases your chances.

2. SGE is useful for monitoring potential AIO design changes but not for predicting how AIO answers might change. One threat to keep an eye on is citation-less AIOs.

3. Social could make a comeback! Many years ago, social signals were hyped as SEO ranking factors. Today, the strong prominence of social networks like YouTube and LinkedIn in citations offers an opportunity to impact AIOs with social and video content.

Thinking Ahead

AIOs do the opposite of leveling the playing field. They create an imbalance where a few sites that get cited get more visibility than everyone else.

However, they also shrink the playing field by answering user questions better and more often than Featured Snippets.

The risk of getting fewer clicks grows with better AIO answers – but there is also the risk of fewer ad clicks. Organic and paid results have always existed in balance: the quality of one impacts the other. Unless Google embeds new ad modules – which is likely – better organic answers will come at the cost of ad revenue.

At the same time, Google is being pushed forward by competitors like OpenAI and Perplexity, which constantly ship better models and increase the chance that searchers won’t use Google for answers. It will be hard for Google not to iterate and innovate on AI in the search results.

Differences in AIO design might arise between the EU and non-EU countries. New regulations and fines will lower the appetite for tech companies like Alphabet, Meta, or Apple to launch AI features in the EU.

The result could be two internets, which would allow us to compare the impact of AI and the changing AI landscape in the EU with countries like the US.

Stay tuned for round two.


Google AI Overview Study: Link Selection Based on Related Queries

New ways to connect to the web with AI Overviews

Featured Image: Paulo Bobita/Search Engine Journal
